The views expressed here are my own and do not necessarily reflect those of Juniper Networks.

=======

May 12, 2015

=======

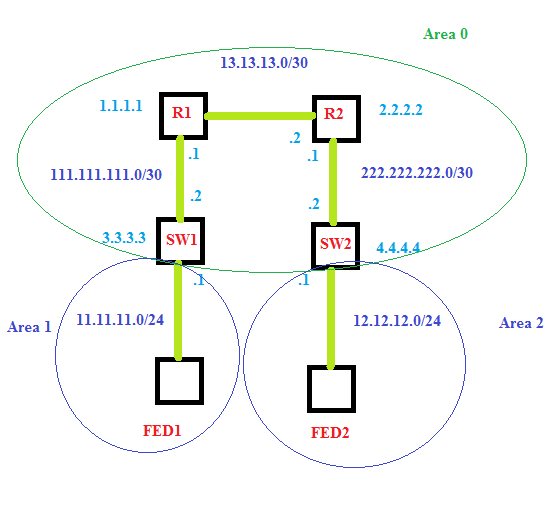

Alrighty, baseline configs are in place. I wasn’t able to use two of the vSRXs as switches as they don’t support etherswitching, but whatever. So now I have 4 routers – two vSRXs at the top connected to each other, each with a vSRX hanging off of it.

Next up: configuring my ports for the clients. I already have the clients IP’d:

Fed1 – 11.11.11.10

Fed2 – 12.12.12.10

I created the gateway for each client as .1 on its respective network. I made a quick Paint drawing (the less art I do, the more configs I can do!):

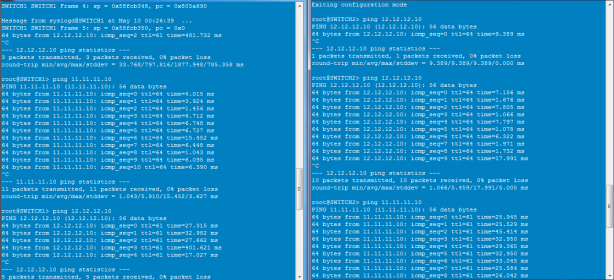

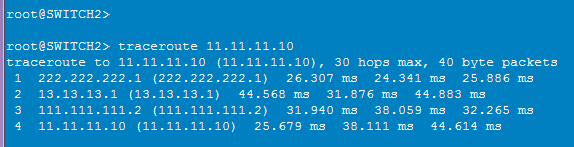

All my neighbors are up, let’s do a test from fed1 to fed2 and then run a traceroute to confirm.

Hosts are responding, so they are routing correctly. Trace looks good. Now, time to get some multicast up!

My two end hosts are Fedora clients. I’ve turned off the firewalls so that I don’t have to mess with that since I am learning multicast today, not iptables.

First, I am going to make fed2 my multicast source. Iperf will run multicast, so I have to install that on the hosts. How hard could it be, right? Oh yeah, I forgot to configure DNS for the hosts – got to do that (needed to run yum/wget on Linux). OK – still not getting out to the net. Ah! No default route. OK, default route is there but still no connectivity?!! The connectivity runs through my SRX100H, and guess what, it doesn’t have routes for 11.11.11.10/12.12.12.10 and probably won’t NAT them either. Time to fix that!

IDP still enabled on the SRX100H, and the license is expired, time to kill that. Annnnd, now the box is running slow as dirt. Can’t catch a break here. All because I want to get iperf installed. Time to sit back for a bit, wait for things to process, and try again in a few. Nope, I am pretty sure I locked it up. Cool thing is that transit traffic is still working as the wife says her internet is still up! Ah well. Tonight I will add the statics on the network for 11.11.11.10/12.12.12.10 to point to their respective routers and get iperf installed.

Scratch that, after about 30 minutes, things settled down on the SRX100H and I am back in, whew! Added the static routes, deleted the idp and utm features from my TRUSTED-LAN zone on the SRX, and committed. Patience this time – I will give it time to process everything and come back before going further.

And finally!

[root@localhost ~]# ping 8.8.8.8

PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.

64 bytes from 8.8.8.8: icmp_seq=1 ttl=51 time=28.0 ms

64 bytes from 8.8.8.8: icmp_seq=2 ttl=51 time=28.2 ms

Let’s install Iperf now.

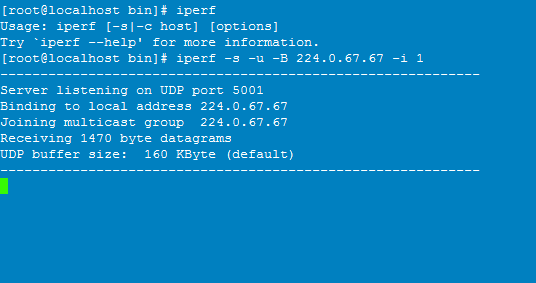

Multicast up! Oh yeah…super excited right?

Real quick breakdown

- iperf – command to execute the tool

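- -s – Puts iperf in server mode. Here the server is the receiver, so it acts as the requester for the 224.0.67.67 stream.

- -u – Use UDP instead of TCP.

- -B – Bind to an address. Binding the server to a multicast group address is what makes iperf join that group.

- -i – Interval in seconds between the periodic bandwidth reports iperf prints.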
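To make the join less magical, here is a minimal Python sketch of roughly what a multicast receiver such as the iperf server does under the hood: bind a UDP socket, then ask the kernel to join the group. The helper names `build_mreq` and `open_receiver` are made up for illustration, and port 5001 is assumed because it is iperf 2’s default and nothing in this lab overrides it.

```python
import socket

IPERF_PORT = 5001  # assumption: iperf 2's default port, never overridden in this lab


def build_mreq(group: str, iface: str = "0.0.0.0") -> bytes:
    """Pack an ip_mreq: 4-byte group address followed by 4-byte local interface address."""
    return socket.inet_aton(group) + socket.inet_aton(iface)


def open_receiver(group: str, port: int = IPERF_PORT) -> socket.socket:
    """Bind a UDP socket and join `group` on the kernel's default interface."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    # The membership request is what triggers the host's IGMP report on the wire.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, build_mreq(group))
    return sock
```

Calling `open_receiver("224.0.67.67")` on Fed1 and then `recvfrom(2048)` on the returned socket would wait for the first datagram of the stream, which is the same role the iperf server plays here.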

Let’s see if the vSRX is going to see the connection.

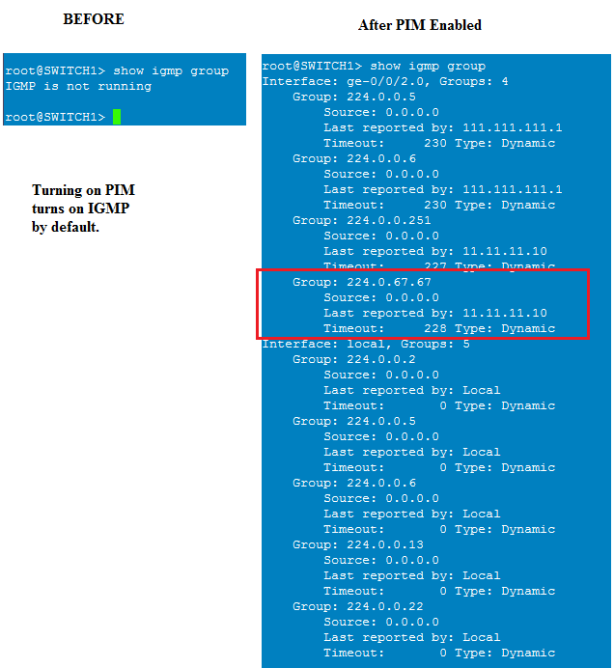

I configured PIM on just the host-facing port. Here is the before and after:

set protocols pim interface ge-0/0/2.0

So, some of these look familiar, right? 224.0.0.5/6 are for OSPF and 224.0.67.67 is the stream we set up on FED1. So what about 224.0.0.251? Looks like it originates from the client as well. Quick search turns up Multicast DNS (mDNS) – cool.

If we look at the local group, we have .5/.6 again for OSPF, but how about .2/.13/.22? 224.0.0.13 I remember from reading is the ALL-PIM-ROUTERS address.

- 224.0.0.2 – multicast to all routers on this subnet

- 224.0.0.22 – IGMP (IGMPv3 membership reports)

- 224.0.0.13 – PIM
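As a quick cheat sheet, the groups seen so far can be put into a small Python lookup. This is only a sketch: the `describe` helper and the `WELL_KNOWN` table are my own illustration, and the table covers only the addresses that showed up in this lab, not the full IANA registry.

```python
import ipaddress

# 224.0.0.0/24 is the link-local block: routers never forward these groups.
LINK_LOCAL_BLOCK = ipaddress.ip_network("224.0.0.0/24")

# Only the groups observed in this lab (not a complete registry).
WELL_KNOWN = {
    "224.0.0.2": "all routers on this subnet",
    "224.0.0.5": "OSPF all routers",
    "224.0.0.6": "OSPF designated routers",
    "224.0.0.13": "all PIM routers",
    "224.0.0.22": "IGMPv3 membership reports",
    "224.0.0.251": "mDNS",
}


def describe(group: str) -> str:
    """Return a short label for an IPv4 address as seen in the group tables."""
    addr = ipaddress.ip_address(group)
    if not addr.is_multicast:
        return "not a multicast address"
    if group in WELL_KNOWN:
        return WELL_KNOWN[group]
    scope = "link-local" if addr in LINK_LOCAL_BLOCK else "routable"
    return f"unlisted {scope} group"
```

The useful distinction is the last one: everything in 224.0.0.0/24 stays on the local segment, while the test stream 224.0.67.67 sits outside that block and so is a group that PIM can actually route.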

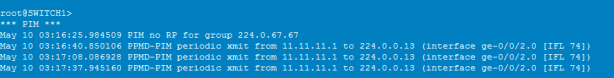

Everything accounted for, good. One last thing, how about traceoptions on PIM? Let’s see what is going on under the hood.

set protocols pim traceoptions file PIM

set protocols pim traceoptions flag all

I’ve turned off the feed request from FED1 so that I can see the whole conversation. Time to run the monitor command:

Pretty boring. The minute I started the request up, you see the first message. After that, all you see is the router saying hi to the PIM multicast address. Let’s turn up the next interface for PIM going towards Router1.

Awww yeah. Lots of data. Had to start it over since my first capture didn’t have it all.

Here is the file from the capture: Multicast PIM Neighbor Add

Keep in mind, the multicast source is turned off still at this point, this is just me adding the interface to the upstream router to PIM.

May 10 03:30:53.400093 EVENT:PIF_UP pif: ge-0/0/1.0

So, interface is up in PIM.

May 10 03:30:53.400193 EVENT:NBR_ADD pif - ge-0/0/1.0 nbr - 111.111.111.2

Adds itself as a neighbor into PIM. It also sets itself as the DR.

May 10 03:30:53.400281 Nbr 111.111.111.2 on ge-0/0/1.0 is up

May 10 03:30:53.400365 task_timer_delete: PIM.master_Initial Hello

May 10 03:30:53.400467 PIM interface ge-0/0/1.0 state elect DR

And really, all we are doing here is setting up PIM neighbors, one for each interface.

May 10 03:30:53.606105 EVENT:NBR_ADD pif - ge-0/0/2.0 nbr - 111.111.111.2

May 10 03:30:53.607718 EVENT:NBR_ADD pif - ge-0/0/1.0 nbr - 11.11.11.1
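With traceoptions set to flag all, the file gets noisy fast, so a small filter helps. Below is a rough Python sketch that pulls only the NBR_ADD events out of a trace. The regular expression is inferred from the handful of lines quoted in this post, so treat the pattern as an assumption rather than a documented log format; `neighbors_added` and `SAMPLE` are illustrative names.

```python
import re

# Pattern inferred from the trace lines quoted in this post, not from Junos docs.
NBR_ADD = re.compile(
    r"^(?P<stamp>\w{3} +\d+ [\d:.]+) EVENT:NBR_ADD "
    r"pif - (?P<pif>\S+) nbr - (?P<nbr>\S+)$"
)


def neighbors_added(lines):
    """Return (interface, neighbor) pairs for every NBR_ADD event, in log order."""
    found = []
    for line in lines:
        match = NBR_ADD.match(line.strip())
        if match:
            found.append((match["pif"], match["nbr"]))
    return found


# Lines copied from the capture above.
SAMPLE = [
    "May 10 03:30:53.400093 EVENT:PIF_UP pif: ge-0/0/1.0",
    "May 10 03:30:53.400193 EVENT:NBR_ADD pif - ge-0/0/1.0 nbr - 111.111.111.2",
    "May 10 03:30:53.606105 EVENT:NBR_ADD pif - ge-0/0/2.0 nbr - 111.111.111.2",
    "May 10 03:30:53.607718 EVENT:NBR_ADD pif - ge-0/0/1.0 nbr - 11.11.11.1",
]

for pif, nbr in neighbors_added(SAMPLE):
    print(f"{pif}: neighbor {nbr}")
```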

So, what happens when we add a feed? Nothing. Since there are no joins going on, both PIM buddies are just sitting there saying hello.

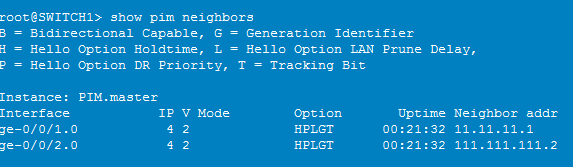

Here they are, just chillin:

So, I am going to go ahead and get Router1, Router2, and Switch2 set up for PIM. All interfaces between FED1 and FED2 are now in PIM, so they should be able to talk. Now the issue is, how do I get FED2 to send the data? Iperf to the rescue again.

==================

May 13, 2015

==================

So, I spent a good bit of time trying to get iperf and vlc running to simulate multicast, but decided on just iperf.

So, now the clients are set up like this:

Requester Fed1 – iperf -s -u -B 224.0.67.67 -i 1

Source Fed2 – iperf -c 224.0.67.67 -u --ttl 5 -t 5

You will note the iperf flags -s = server and -c = client. It just happens that client in this case is sending the data, so is actually the source for the multicast data.
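The TTL flag on the source matters more than it looks: on Linux the default TTL for outgoing multicast is 1, so without raising it the stream would die at the first router. Here is a minimal Python sketch of the sending side, again only as an illustration of what the iperf client is doing. `open_sender` and `send_probe` are hypothetical helpers, and port 5001 is assumed as iperf 2’s default.

```python
import socket


def open_sender(ttl: int = 5) -> socket.socket:
    """Create a UDP socket whose multicast packets leave with the given TTL."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    # Without this option the kernel default of 1 keeps the stream on the local segment.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, ttl)
    return sock


def send_probe(sock: socket.socket, group: str = "224.0.67.67",
               port: int = 5001, payload: bytes = b"hello multicast") -> int:
    """Send one datagram to the group and return the number of bytes sent."""
    return sock.sendto(payload, (group, port))
```

Note that the sender never joins anything. It just addresses packets to the group, and it is up to PIM to decide whether anybody downstream wants them.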

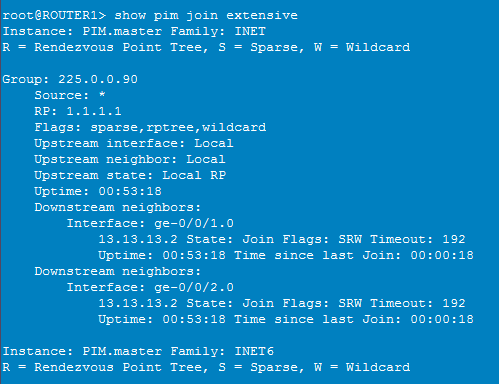

Yesterday I also set up the rest of the PIM interfaces and established Router1 as the RP.

Router1:

set protocols pim rp local address 1.1.1.1

set protocols pim interface ge-0/0/1.0

set protocols pim interface ge-0/0/2.0

Rest of the PIM enabled routers:

set protocols pim rp static address 1.1.1.1

set protocols pim interface ge-0/0/2.0

set protocols pim interface ge-0/0/1.0
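The only difference between the RP and everyone else is one keyword: the RP declares the address as local, the others point at it as static. A tiny Python sketch makes that explicit by rendering both variants from the same inputs. `pim_config` is a made-up helper for this post, and it emits only the set commands already shown above.

```python
def pim_config(interfaces, rp_address="1.1.1.1", is_rp=False):
    """Render the PIM set commands used in this lab for one router."""
    # The RP advertises the address as its own; every other router points at it.
    role = "local" if is_rp else "static"
    lines = [f"set protocols pim rp {role} address {rp_address}"]
    lines += [f"set protocols pim interface {ifname}" for ifname in interfaces]
    return lines


lab_interfaces = ["ge-0/0/1.0", "ge-0/0/2.0"]
print("\n".join(pim_config(lab_interfaces, is_rp=True)))   # Router1 (the RP)
print("\n".join(pim_config(lab_interfaces)))               # every other PIM router
```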

I was also able to get the static join working. On Switch2, I put this in:

set protocols igmp interface ge-0/0/1.0 static group 225.0.0.90

As you can see, before I turn on iperf for the multicast, the RP, Router1, sees the static join for 225.0.0.90:

Cool, right? Let’s turn on iperf and watch it actually get a fulfillment of the stream!

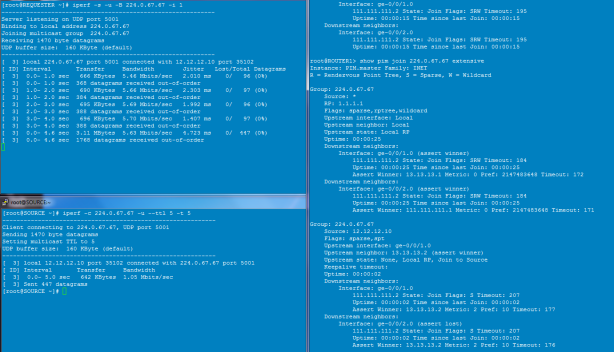

So on the left, we have the requester (Fed1 – top) and the source (Fed2 – bottom). I initiated the commands to get iperf started. On the right is Router1. You get your first (*,G) entry, showing a request for any source to satisfy the join for 224.0.67.67.

Remember that upstream is towards the source: interface ge-0/0/1, which faces Router2 and ultimately the source, Fed2.

You will notice we have two downstream neighbors – both Switch1 and Router2 have PIM enabled, so they are also downstream neighbors.

Turned on traceoptions and started the process again. With just the server running, you don’t see much as with multicast the fun begins when the client wants the data.

Turned on the client on Fed2 and the log starts going bonkers:

First, the RP gets the message and starts a resolve query. In this case, the query is for 224.0.67.67 from client 12.12.12.10 via interface ge-0/0/2 which is the right direction since it came from Router2.

May 11 02:46:02.920657 EVENT:RSLV_REQ Addr: 224.0.67.67.12.12.12.10 On interface - ge-0/0/2.0(p)

Then the RP asserts the path for the stream, saying the best path for the group 224.0.67.67 is through interface ge-0/0/2 via the IP 111.111.111.1

ASSERT-FSM:ge-0/0/2.0(111.111.111.1):NOINFO->WINNER for 224.0.67.67.0.0.0.0 metric 0 pref 0x80000000

May 11 02:46:02.921065 PIM resolving request for Group 224.0.67.67 Source 12.12.12.10 Interface ge-0/0/1.0
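The trace lines pack the group and the source into a single dotted string, which is easy to misread. Here is a small Python sketch that splits it back apart. Treating 0.0.0.0 as "any source" is my assumption, based on the trace pairing 224.0.67.67.0.0.0.0 with the (*,g) state; `split_group_source` is a hypothetical helper.

```python
def split_group_source(packed: str):
    """Split 'g.g.g.g.s.s.s.s' into (group, source); source is None for a wildcard."""
    octets = packed.split(".")
    if len(octets) != 8:
        raise ValueError(f"expected 8 dotted octets, got {len(octets)}: {packed!r}")
    group = ".".join(octets[:4])
    source = ".".join(octets[4:])
    # Assumption: an all-zero source is how the trace writes the (*,G) wildcard.
    return group, (None if source == "0.0.0.0" else source)


print(split_group_source("224.0.67.67.12.12.12.10"))
print(split_group_source("224.0.67.67.0.0.0.0"))
```

Read this way, the RSLV_REQ line is about the (S,G) pair for source 12.12.12.10, while the ASSERT-FSM line is about the shared (*,G) entry for the same group.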

Next we get the actual join process event:

May 11 02:46:02.958339 EVENT:DS_JOIN

US-FSM: US Event - JOIN_DESIRED_TRUE Current State - NOT_JOINED New State - JOINED

US-FSM: SENDING TRIGGER JOIN

US-FSM: SENDING PERIODIC JOIN

EVENT:US_STATE_JOINED sg state: 224.0.67.67.12.12.12.10 Flags-sm:

The JOINED state is likely what you see when you run show pim join 224.0.67.67 extensive.

And finally, after I’m done transferring data, a prune message is sent.

May 11 02:48:56.578282 EVENT:DS_ASSERT_PRUNE

EVENT(DS_ASSERT_PRUNE): if ge-0/0/1.0(p) Grp 224.0.67.67 Src (null)

Assoc(*,g): 224.0.67.67.0.0.0.0 Flags-sm:wc:rpt:

Now that the basics are in place, I can do some more reading. I might dig into some more traceoptions and see what happens when I take an interface out of PIM as well.

For now, that is my intro to Multicast!